Translated articles in the CrossRef database

Edited by VictorVenema

Latest revision as of 16:25, 4 June 2022

CrossRef is an organization of scientific publishers that manages the Digital Object Identifiers (DOIs) given to scientific articles. As a consequence it has a large database of scientific works, some of which are or have translations. [The CrossRef database has an API](http://api.crossref.org) from which we can request the records of works that are (filter=relation.type:is-translation-of) or have (filter=relation.type:has-translation) translations. The code we used to download and analyse the data can be found in our [Codeberg Git repository](https://codeberg.org/translate-science/Analyze_Translations_CrossRef_Database/). This is not a scientific study, and some of the results below (especially the ones pointing to problems) may no longer be reproducible, as the problems may have been fixed in the meantime.
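As a rough illustration of such a request (not the code from our repository), the sketch below builds the query URL for either filter and pages through the results. The `rows`, `cursor` and `mailto` parameters and the `next-cursor` field are how we understand the public REST API to work; the function names are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_ROOT = "https://api.crossref.org/works"


def translation_query_url(relation, rows=100, cursor="*", mailto=None):
    """Build a works query for records that are or have translations."""
    if relation not in ("is-translation-of", "has-translation"):
        raise ValueError(f"unexpected relation type: {relation}")
    params = {
        "filter": f"relation.type:{relation}",
        "rows": rows,
        "cursor": cursor,  # "*" starts a new deep-paging session
    }
    if mailto:
        params["mailto"] = mailto  # optional contact address
    return f"{API_ROOT}?{urlencode(params)}"


def fetch_all(relation, rows=100, mailto=None):
    """Yield every matching record, following the cursor page by page."""
    cursor = "*"
    while True:
        url = translation_query_url(relation, rows, cursor, mailto)
        with urlopen(url) as response:
            message = json.load(response)["message"]
        items = message.get("items", [])
        if not items:
            break  # an empty page marks the end of the result set
        yield from items
        cursor = message["next-cursor"]
```

Calling `list(fetch_all("has-translation"))` would then download all works that have a translation; nothing is fetched until the generator is consumed.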

Results

CrossRef currently knows 4142 translations, while only 2152 works have a translation. This makes sense, as translated articles are often translated into many languages. It could have been different, though: translations quite commonly do not have a DOI and are only linked to with a URL, and thus have no entry of their own in the CrossRef database.

Publishers that publish translations tend to publish many. On average, a publisher that publishes translations publishes 72 of them, although only seven publishers have more than 100 translations. Similarly, an average journal that publishes translations publishes 36 translations.

Translations by year

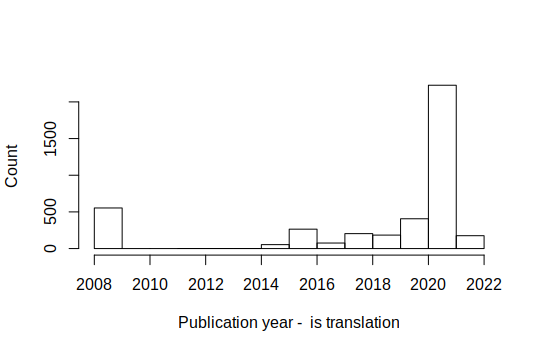

The graph of the number of translations published per year has suspicious peaks at both ends, but these seem to be genuine. The peak in 2021 is due to a large number of articles entered into the record by [Pleiades Publishing](https://www.pleiades.online/), which specializes in making translations. The peak in the first year, 2008, is due to an [anatomical atlas](https://www.imaios.com/en/e-Anatomy/), which was published in 9 languages and split into many parts.

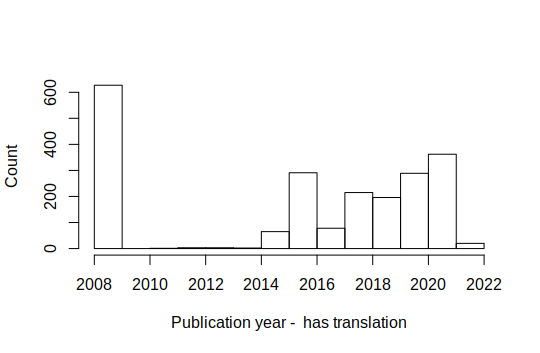

The peak in the first year of the graph for works that have translations is also due to the anatomical atlas.

Where are the translations?

Many works that have a translation point to a URI (generally a URL), not a DOI, so further information on these translations is not in the CrossRef database. URIs are especially common when you look at the number of translations rather than the number of translated works, because works pointing to a URI tend to have multiple translations. Information on these translations (language, title, translator, ...) is not available and will have to be scraped.

The works that have a translation point to a DOI 1466 times and to a URL 750 times (when they pointed to both, I have assumed these were duplicates and counted only the DOIs). The 1466 works pointing to a DOI link to 2311 translations with a DOI, while the 750 works that point to a URL link to 5987 translations. This is to a large part due to the anatomical atlas, which is available in nine languages.
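The counting rule in the paragraph above can be sketched as follows. This is an illustration, not our actual analysis code: it assumes each work record carries a `relation` dictionary whose `has-translation` entries have an `id-type` of `doi` or `uri`, and the function names are invented for the example.

```python
from collections import Counter


def classify_work(record, relation="has-translation"):
    """Return 'doi', 'uri' or None for one work record."""
    targets = record.get("relation", {}).get(relation, [])
    id_types = {target.get("id-type") for target in targets}
    if "doi" in id_types:
        return "doi"  # a DOI wins when both kinds are present
    if "uri" in id_types:
        return "uri"
    return None


def count_targets(records, relation="has-translation"):
    """Count works and linked translations per identifier kind."""
    works, links = Counter(), Counter()
    for record in records:
        kind = classify_work(record, relation)
        if kind is None:
            continue
        works[kind] += 1
        # Only links of the winning kind are counted for this work.
        links[kind] += sum(
            1
            for target in record["relation"][relation]
            if target.get("id-type") == kind
        )
    return works, links
```

With this rule a work that lists both a DOI and a URL for its translations contributes only its DOI links, which matches the assumption that the two are duplicates.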

CrossRef has 4231 articles that are marked as being a translation. They tend to point to an original with a DOI (4217 times), while only 14 point to an original with a URI. These originals with a DOI should mostly be in the CrossRef database (except if the DOI is wrong), but only 1256 of them were in my dataset of articles that have a translation. In these cases, with a few exceptions, the publisher of the original and of the translation is the same. It looks like the person uploading the data for the translation does not have permission to also update the record of an original by another publisher, but has to contact the publisher of the original and ask them to enter the additional information.

The records that have or are translations point to 6339 documents with a DOI that are or have translations. We downloaded these records to get more information on the linked articles. Of these 6339 DOIs, 215 were apparently wrong: the CrossRef database did not return any data for them. We did find by coincidence that in one case doi.org did return a result, so maybe this was a DataCite DOI. Is there a way to see where a DOI is registered?
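One possible answer, which we have not checked against our 215 failing DOIs: to our understanding the CrossRef REST API has an `agency` route per DOI that names the registration agency. The sketch below only builds that URL and reads the agency from a response; the route, the response shape and the sample payload (with a made-up DOI) are assumptions to verify against the API documentation.

```python
import json
from urllib.parse import quote


def agency_url(doi):
    """URL of the (assumed) agency lookup for a single DOI."""
    return f"https://api.crossref.org/works/{quote(doi, safe='/')}/agency"


def parse_agency(payload):
    """Extract the agency identifier from a decoded JSON response."""
    return payload["message"]["agency"]["id"]


# Illustrative payload only; the DOI and the values are invented.
sample = json.loads(
    '{"status": "ok", "message-type": "work-agency",'
    ' "message": {"DOI": "10.1234/example",'
    ' "agency": {"id": "datacite", "label": "DataCite"}}}'
)
print(agency_url("10.1234/example"), parse_agency(sample))
```

If this works as assumed, running the lookup for every DOI that CrossRef could not resolve would separate mistyped DOIs from DOIs registered elsewhere.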

To study how many publishers are involved we used the "publisher prefix" of the DOI (the part before the first "/") and not the publisher name in the metadata, as the name can be written in many ways. Theoretically the publisher of an article can change; in that case we would analyse the first publisher of the article, not the current one. We found that 75 publishers published the originals, 57 publishers published translations and 83 publishers published either originals or translations. Furthermore, there are only 84 pairs of publishers, where a pair is the publisher of an original together with the publisher of its translation. In most cases publishers publish their own translations; for 3769 of 6282 pairs the publisher was the same. So only a few publishers do translations, and the ones that do tend to do so for their own works or in fixed partnerships.
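The prefix comparison described above can be sketched like this. It is a minimal illustration with invented function names and made-up DOIs, not the code from our repository.

```python
from collections import Counter


def publisher_prefix(doi):
    """Return the part of a DOI before the first '/'."""
    doi = doi.strip().lower()
    # Strip common resolver or scheme prefixes before splitting.
    for lead in ("https://doi.org/", "http://dx.doi.org/", "doi:"):
        if doi.startswith(lead):
            doi = doi[len(lead):]
    return doi.split("/", 1)[0]


def publisher_pairs(doi_pairs):
    """Count (original prefix, translation prefix) combinations."""
    return Counter(
        (publisher_prefix(original), publisher_prefix(translation))
        for original, translation in doi_pairs
    )


def same_publisher_share(pair_counts):
    """Fraction of article pairs whose two prefixes are identical."""
    total = sum(pair_counts.values())
    same = sum(n for (orig, trans), n in pair_counts.items() if orig == trans)
    return same / total if total else 0.0


# Made-up DOIs, only to show the shape of the input.
pairs = [("10.1111/a.1", "10.1111/a.1.en"), ("10.1111/b.2", "10.2222/x.9")]
print(same_publisher_share(publisher_pairs(pairs)))
```

For the numbers reported above, 3769 of 6282 pairs corresponds to a share of about 60%.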

Articles that are in the CrossRef database as a translation do not often have information on translations themselves. So publishers mostly do not see the "original" as a translation of the translation. Put another way, publishers do not see all language versions as equal, but tend to see one as the original and all other versions as translations.

Languages

Besides the translations that are only linked by URL, many of the CrossRef database entries of articles that are or have translations also lack information on the language. Only 2033 of these DOIs, about a third, contained information on the language the work is written in. Furthermore, by a quick eyeball estimate, in a few percent of cases the language in the database does not match the language of the title. It is quite common that the language is encoded in the URL or even in the DOI. This is not done in a standard way, although ISO language codes are generally used and the language code is often an extension (DOI) or a subdirectory (URL).
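A heuristic for reading such a code from an identifier could look like the sketch below. It is an untested idea, not something we ran on the data: the list of codes is limited to the six languages found in the metadata, the DOI in the usage line is invented, and false positives (a two-letter path segment that is not a language) are to be expected.

```python
import re
from urllib.parse import urlparse

# Two-letter ISO 639-1 codes for the languages seen in the metadata.
KNOWN_CODES = {"en", "no", "es", "pt", "ru", "it"}


def language_hint(identifier):
    """Guess a language code from a URL subdirectory or DOI extension."""
    if identifier.lower().startswith(("http://", "https://")):
        # URL: look for a path segment that is exactly a known code.
        path = urlparse(identifier).path
        for segment in path.split("/"):
            if segment.lower() in KNOWN_CODES:
                return segment.lower()
        return None
    # DOI: look for a two-letter extension after '.', '_' or '-'.
    match = re.search(r"[._-]([A-Za-z]{2})$", identifier)
    if match and match.group(1).lower() in KNOWN_CODES:
        return match.group(1).lower()
    return None


print(language_hint("https://www.imaios.com/en/e-Anatomy/"))
print(language_hint("10.1234/journal.2020.15.es"))  # invented DOI
```

Such a hint is best treated as one weak signal among several, to be combined with the metadata language and the title.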

Of the translations for which we have information, 2461 are in English, 154 in Norwegian, 44 in Spanish, 43 in Portuguese, 8 in Russian and 7 in Italian according to the metadata. The works that have translations are in Russian 279 times, in English 121 times, in Norwegian 114 times, in Portuguese 8 times and in Italian 5 times according to the metadata.

When it comes to pairs of original articles and their translations the situation is worse. Of the 6282 pairs, 1031 articles are translated from Russian to English, 2 from English to Spanish and 1 from English to Portuguese. That is 16% of the pairs. For the others we either do not have the language (of one or both) or the language of original and translation is the same according to the metadata; we have 268 Norwegian-Norwegian pairs, as well as 243 English-English, 44 Portuguese-Portuguese and 10 Italian-Italian pairs.
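The arithmetic behind these shares, and the way pairs fall into the three groups, can be written out as a small sketch. The function name and the toy input are invented; the figures are the ones quoted above.

```python
from collections import Counter


def summarize_pairs(pairs):
    """Sort (original language, translation language) pairs into groups."""
    counts = Counter()
    for original, translation in pairs:
        if original is None or translation is None:
            counts["language missing"] += 1
        elif original == translation:
            counts["same language"] += 1  # implausible for a translation
        else:
            counts["usable"] += 1
    return counts


print(summarize_pairs([("ru", "en"), ("no", "no"), (None, "en")]))

# Figures quoted in the text.
total_pairs = 6282
usable = 1031 + 2 + 1               # ru-en, en-es, en-pt
same_language = 268 + 243 + 44 + 10  # no-no, en-en, pt-pt, it-it
missing = total_pairs - usable - same_language

print(f"usable: {usable} ({usable / total_pairs:.0%})")
print(f"same language: {same_language} ({same_language / total_pairs:.0%})")
print(f"language missing: {missing} ({missing / total_pairs:.0%})")
```

The count for missing languages is derived here by subtraction, on the assumption that the three groups cover all pairs.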

Discussion

Many journals likely also have translations from before the time they started adding this information to CrossRef. As it is a limited set of publishers and journals, we could contact them. For example, the publisher Pleiades, which put so many articles into the database in 2021, has existed since 1971 and may well have a large back catalogue.

The translations in the CrossRef database may have very different properties from translations that are not in CrossRef, for example, translations made by research institutions or libraries (and published on their homepage) or made by individual scholars (and uploaded to repositories).

As information on the language is often missing, we would need a method to estimate it from the title (and, if possible, the abstract). There are many tools to estimate the language of a text, but the shorter the text (such as a title), the higher the error rate. It would be great to have a method that indicates how accurate the estimate is, to make it easier to combine it with other methods to guess the language (such as the DOI, URL or location of the publisher), as well as to highlight the difficult cases for manual checks.
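To make the idea of an estimate with an accuracy indication concrete, here is a deliberately crude sketch that only looks at the writing system of a title. It is not a language detector (it cannot tell Norwegian from English), but it could separate Russian from English titles and it shows how a score and a "check manually" flag could be returned together. The function names and the 0.9 threshold are arbitrary choices for the example.

```python
import unicodedata
from collections import Counter


def script_shares(text):
    """Share of letters per script, based on Unicode character names."""
    scripts = Counter()
    for char in text:
        if char.isalpha():
            name = unicodedata.name(char, "")
            if name:
                scripts[name.split(" ")[0]] += 1  # e.g. LATIN, CYRILLIC
    total = sum(scripts.values())
    return {script: n / total for script, n in scripts.items()} if total else {}


def guess_script(text, threshold=0.9):
    """Return (script, share, needs_manual_check) for a title."""
    shares = script_shares(text)
    if not shares:
        return None, 0.0, True
    script, share = max(shares.items(), key=lambda item: item[1])
    return script, share, share < threshold


for title in ("Translated articles", "Переводы статей", "ДНК and RNA analysis"):
    print(title, guess_script(title))
```

A real language detector could be dropped in behind the same interface, so that its probability plays the role of the share used here and low-scoring titles are routed to manual checks.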